You or someone you know has undoubtedly visited the hospital for chest pain, the birth of a baby, a severe headache, or some other unfortunate event. At some point during that visit, you were hooked up to sensors that monitored your vitals to detect problems occurring deep within your body that are not otherwise easy to know about or detect.

We’re fortunate to live in such a time. A few centuries ago, physicians were forced to diagnose patients by speaking with them and observing their external appearance. The link between temperature and something going wrong in the body was cloudy at best. Diagnosis was limited to observation of external symptoms and effective communication between patient and physician. Temperatures were measured by placing a hand on the forehead.

Using Technology to Understand More

In the 1700s, the first thermometers were used to measure patients’ temperatures. The device was a foot long and took 20 minutes to produce a somewhat accurate reading.

Austrian Anton De Haen “studied diurnal changes in normal subjects and observed changes in temperature with shivering or fever, and he noted the acceleration of the pulse when temperature was raised. He found that temperature was a valuable indication of the progress of an illness. But his contemporaries were unimpressed, and the thermometer was not widely used.”

It took well over 100 years, the invention of a more usable thermometer (only 6 inches long, and needing 5 minutes to take a temperature), and the publication of over 1,000,000 temperature readings from over 25,000 patients before temperatures were measured regularly as an indicator of health.

(Author’s Note: Most of the information above is taken from The Oxford Journals.)

We can now take accurate temperatures in mere seconds without any human contact. While familiar to most of us, the sensors applied in the emergency room today are still often big, bulky, and costly. In the near future, sensors will simply be persistent and more accurate, and will allow the discovery of new correlations with nanoscale bio-indicators that we don’t even know exist yet.

Sensors Everywhere

But sensors are not just being applied to humans. They’re being connected to everything: jet engines, locomotives, livestock, clothing, thermostats, cars, buildings, walls and ceilings, and ultimately just about everything else.

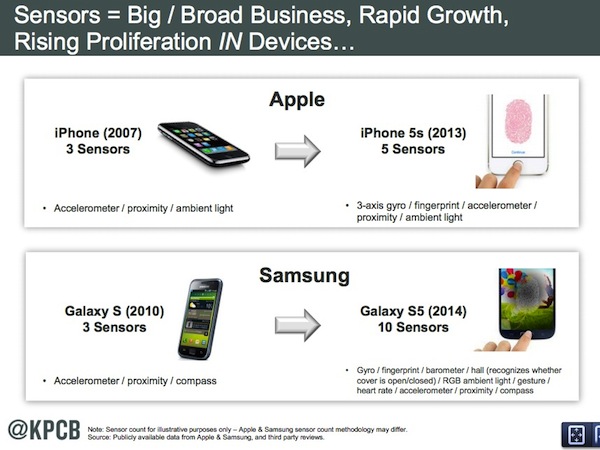

And these sensors are getting smaller and more advanced, as demonstrated by the number of sensors now embedded within our smartphones.

The value of these sensors is in the data that they gather and store. But this introduces 5 key questions:

– What sort of data will we be getting?

– How will we store the data?

– Where will we store the data?

– How will we transport the data?

– How will we analyze the data?

And this is where the simultaneous advances of several technology domains are converging. Broadband and cloud computing are becoming more ubiquitous and providing easy, affordable, and accessible answers to the questions above regarding the transportation and destination for all of this sensor data.

Storage and Computing Costs “Racing to Free”

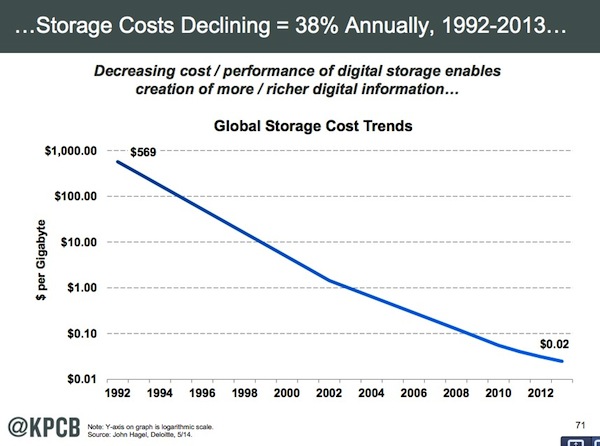

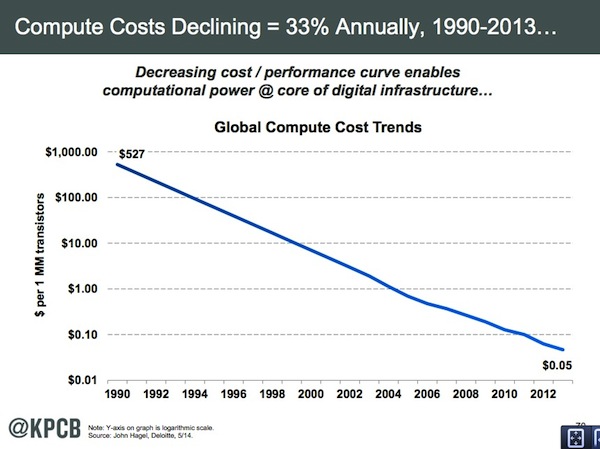

The other critical pieces to the development of the Internet of Everything are storage and processing power.

It is the advances in the availability of these two fundamental building blocks that are enabling the exponential changes happening all around us. The near 25,000% decrease in the cost of storage and processing power over the past two decades is allowing us to connect our world at an ever more granular level. See the charts above (courtesy of Mary Meeker and John Hagel).

This exponential pace of change is putting pressure on every organization, and driving significant change in how humans interact with other humans and with the machines that they’ve constructed.

Doctors ultimately discovered that a fever was often an indicator of the body’s immune system kicking in. As science progressed, it was then learned that fevers are generated by the hypothalamus, which sits at the base of the brain, detecting elevated levels of pyrogens in the bloodstream and telling the body to generate and retain heat. Understanding more about how the body works has triggered new approaches and advances in medicine. However, what was revolutionary in 2013 will be archaic in 2023. Nanomedicine, genome sequencing, artificial DNA, and a host of other pioneering fields are set to revolutionize the way that we view healthcare.

The same is true for every discipline. In domains free from regulatory control and deeply entrenched bureaucracy, the changes will come quicker.

Jumping the Titanic for a Speed Boat

While there will undoubtedly be froth attached to this trend, those individuals and organizations that are able to gain a deeper understanding of their customers, their products, their supply chain, and the worlds and ecosystems that evolve around each of them will be far more prepared to make the most advantageous decisions.

The organizations that lag will be like someone reading decade-old textbooks for information in the era of social networks. They will be like the Native Americans competing with bows and arrows against gunpowder.

Just as ice grows and expands in the winter, the digitization of everything is sweeping across our world. Like a seemingly benign iceberg, it is simultaneously mounting a cruel and ruthless attack on unsuspecting Titanic-esque organizations sailing happily through the night, and providing a limited number of speed boats to those aware and ambitious enough to explore an exciting new world.

This post is brought to you by InnovateThink and Cisco.
